Edmunds Car News

Latest Car News

Must Watch

Ford Mustang Dark Horse SUPERCHARGED Dyno Test

We put the Dark Horse on the dyno to find out what kind of numbers it's putting down compared to the stock car.

More car news articles

- Tested: 2025 Audi RS 3 Is Like a Reverse Mullet (09/26/2025)

- A Road Trip Reveals Our Honda Civic Hybrid's Few Flaws (09/25/2025)

- The Acura ZDX Is Dead After Just One Model Year (09/24/2025)

- If You Want a 2026 Toyota Corolla Cross, Get the Hybrid (09/24/2025)

- The 2026 Volvo EX90 Gets Big Charging Upgrade, Is Now 800-Volt (09/23/2025)

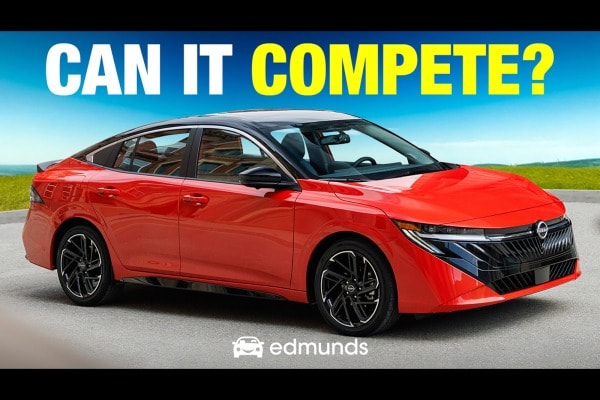

- The 2026 Nissan Sentra Is Completely Unrecognizable (09/23/2025)

- 2026 Toyota GR Supra Final Edition: Goodbye or Good Riddance? (09/23/2025)

- 2025 Ford Escape vs. Lincoln Corsair: Is the Luxury Pick Worth It? (09/22/2025)

- Honda Motocompacto Is the Most Efficient (and Painful) EV We’ve Ever Tested (09/22/2025)

- After 1,000 Miles in Our Porsche Macan EV, I'm a (Slightly) Bigger Fan (09/21/2025)